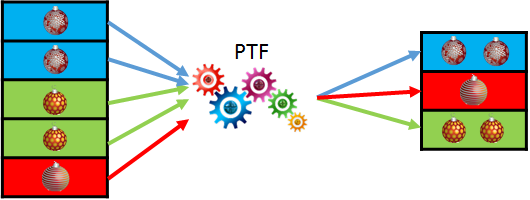

In the first three parts of the series we have seen how a PTF basically works, but we have focused on row-semantic PTF only. In this part we’ll look at the table semantic PTF. As a reminder, the main difference to row-semantic PTF is that it is not enough to look at the currently processed row to produce the result. With table semantic PTF we often also need some summarized state from the previously processed rows. They are useful to implement user defined aggregate or window functions. Let’s first try to implement a very basic example of table semantic PTF and learn more theory as we go.

What will be our task? In the second part we have previously developed a PTF to concatenate fields into a CSV-list. Let’s take this new column and summarize its length. Again, it’s very basic and doesn’t actually require any PTF, it can be simply done with SQL. We are doing it this way just to show how table semantic PTF works.

CREATE OR REPLACE PACKAGE sumlen_package AS

FUNCTION describe ( tab IN OUT dbms_tf.table_t

, cols2sum IN dbms_tf.columns_t )

RETURN dbms_tf.describe_t ;

PROCEDURE fetch_rows;

END sumlen_package;

/

CREATE OR REPLACE FUNCTION sumlen_ptf (tab TABLE, cols2sum COLUMNS )

RETURN TABLE PIPELINED TABLE POLYMORPHIC USING sumlen_package;

/

Listing 1: Package specification

There are no differences in package specification to what we’ve seen so far. We’ll be using the parameter cols2sum to pass the column(s) whose length we want to summarize. The definition of the PTF itself is where we tell the database about the semantic of the function. It is the word TABLE before the word POLYMORPHIC.

DESCRIBE

After three previous parts of this series we now have some experience as PTF developer and can guess how the implementation of DESCRIBE could look like (see Listing 2). But it is important to note that we are making some sort of a shortcut here. Our implementation of DESCRIBE assumes the list of columns can be passed with parameter cols2sum, if we wanted to aggregate multiple columns at once. That means, we cannot just return one hard coded new column. We have to define them in a loop, because we just don’t know in advance how many there will be. But this shortcut is just okay for now. I will show the more generic case in one of the next posts.

CREATE OR REPLACE PACKAGE BODY sumlen_package AS

FUNCTION describe (tab IN OUT dbms_tf.table_t

, cols2sum IN dbms_tf.columns_t )

RETURN dbms_tf.describe_t IS

BEGIN

FOR I IN 1 .. tab.COLUMN.COUNT LOOP

IF tab.COLUMN(i).description.name MEMBER OF cols2sum THEN

tab.column(i).for_read := true;

END IF;

END LOOP;

RETURN dbms_tf.describe_t(

new_columns =>

dbms_tf.columns_new_t(

1 => dbms_tf.column_metadata_t(

name => 'SUM_LEN',

TYPE => dbms_tf.type_number)));

END;

...

Listing 2: Implementation of the DESCRIBE method

FETCH_ROWS

What is to consider while implementing FETCH_ROWS? As already mentioned, you have no control over how often FETCH_ROWS is executed. The processing is done in chunks; one chunk is not larger than 1024 rows (not documented, but what I’ve seen so far). It makes sense because all the collections are kept in the PGA.

So if we have to aggregate something, we can only build a subtotal based on our actual chunk and then we need a mechanism to pass these subtotals from one execution to the next. There is a simple XSTORE interface for storing key-value pairs during the execution which we can use for our example. We are using the variable v_len to get and set the current sum (line 10 and 16) at the beginning and the end of the current execution of FETCH_ROWS.

Another possibility to handle the execution state using your own structures and the special execution ID returned by DBMS_TF.GET_XID function will be considered in later posts.

PROCEDURE fetch_rows IS

rowset dbms_tf.row_set_t;

colcnt PLS_INTEGER;

rowcnt PLS_INTEGER;

len_curr_col dbms_tf.tab_number_t;

v_len pls_integer;

v_currlen pls_integer := 0;

BEGIN

dbms_tf.get_row_set(rowset, rowcnt, colcnt);

dbms_tf.xstore_get('len', v_len);

v_currlen := nvl(v_len, 0);

FOR r IN 1..rowcnt LOOP

v_currlen := v_currlen + length (rowset(1).tab_varchar2(r));

len_curr_col(r) := v_currlen ;

END LOOP;

dbms_tf.xstore_set('len', v_len+v_currlen);

dbms_tf.put_col(1, len_curr_col);

END fetch_rows;

END sumlen_package;

/

Listing 3: Implementation of the FETCH_ROWS method

We are ready to call the function (see Listing 4). The first attempt runs against a documented restriction: it is not allowed to nest polymorphic functions. But it is very easy to workaround this using a WITH clause.

SQL> -- Nesting PTF doesn't work

SELECT *

FROM sumlen_ptf(my_ptf(scott.emp, COLUMNS(deptno))

, COLUMNS(agg_col))

ORA-62569: nested polymorphic table function is disallowed

SQL>

SQL> -- But it works via CTE (WITH clause)

SQL> WITH agg as (SELECT * FROM my_ptf(scott.emp, COLUMNS(deptno)))

2 SELECT *

3 FROM sumlen_ptf(agg , COLUMNS(agg_col));

DEPTNO AGG_COL ... SUM_LEN

---------- -----------------------------... ----------

20 7369;"SMITH";"CLERK";7902;"17... 52

30 7499;"ALLEN";"SALESMAN";7698;... 111

30 7521;"WARD";"SALESMAN";7698;"... 169

20 7566;"JONES";"MANAGER";7839;"... 224

30 7654;"MARTIN";"SALESMAN";7698... 285

30 7698;"BLAKE";"MANAGER";7839;"... 340

10 7782;"CLARK";"MANAGER";7839;"... 395

20 7788;"SCOTT";"ANALYST";7566;"... 450

10 7839;"KING";"PRESIDENT";;"17.... 502

30 7844;"TURNER";"SALESMAN";7698... 560

20 7876;"ADAMS";"CLERK";7788;"23... 613

30 7900;"JAMES";"CLERK";7698;"03... 665

20 7902;"FORD";"ANALYST";7566;"0... 719

10 7934;"MILLER";"CLERK";7782;"2... 773

14 rows selected.

Listing 4: Calling the PTF

Partitioning and Ordering

With table semantic PTF the incoming data can be optionally partitioned and/or ordered like in Listing 5. Note that this is not allowed for row semantic PTF. There are few limitations, however. You cannot simply use comma separated column lists, but need to enclose them in parenthesis. And it seems not to be possible to use any SQL expressions as in corresponding clauses of analytical functions, just simple column names.

SQL> SELECT deptno, empno, ename, sum_len

2 FROM sumlen_ptf (scott.emp PARTITION BY deptno

3 ORDER BY empno

4 , COLUMNS(ename));

DEPTNO EMPNO ENAME SUM_LEN

---------- ---------- ---------- ----------

10 7782 CLARK 5

10 7839 KING 9

10 7934 MILLER 15

20 7369 SMITH 5

20 7566 JONES 10

20 7788 SCOTT 15

20 7876 ADAMS 20

20 7902 FORD 24

30 7499 ALLEN 5

30 7521 WARD 9

30 7654 MARTIN 15

30 7698 BLAKE 20

30 7844 TURNER 26

30 7900 JAMES 31

14 rows selected.

SQL>

SQL> -- You need parenthesis for column lists in partition by or order by

SQL>

SQL> SELECT deptno, empno, ename, sum_len

2 FROM sumlen_ptf (scott.emp PARTITION BY deptno, mgr

3 ORDER BY empno

4 , COLUMNS(ename));

ORA-62555: Invalid use of PARTITION BY or ORDER BY clause.

SQL>

SQL> -- but this works

SQL> SELECT deptno, mgr, empno, ename, sum_len

2 FROM sumlen_ptf (scott.emp PARTITION BY (deptno, mgr)

3 ORDER BY empno

4 , COLUMNS(ename));

DEPTNO MGR EMPNO ENAME SUM_LEN

---------- ---------- ---------- ---------- ----------

10 7782 7934 MILLER 6

10 7839 7782 CLARK 5

10 7839 KING 4

20 7566 7788 SCOTT 5

20 7566 7902 FORD 9

20 7788 7876 ADAMS 5

20 7839 7566 JONES 5

20 7902 7369 SMITH 5

30 7698 7499 ALLEN 5

30 7698 7521 WARD 9

30 7698 7654 MARTIN 15

30 7698 7844 TURNER 21

30 7698 7900 JAMES 26

30 7839 7698 BLAKE 5

14 rows selected.

SQL>

SQL> -- No expressions possible

SQL>

SQL> SELECT deptno, empno, ename, sum_len

2 FROM sumlen_ptf (scott.emp PARTITION BY SUBSTR(deptno,1,1)

3 ORDER BY empno

4 , COLUMNS(ename));

ORA-00907: missing right parenthesis

Listing 5: Partitioning and ordering the incoming data

Note that if you partition the input, the FETCH_ROWS will be executed at least once per partition. In other words, you are always dealing with rows from one partition inside the FETCH_ROWS. But does this mean you don’t even need to know, whether or how the input was partitioned on PTF invocation? For the simplest case like our example this seems to be true. But in general I’m rather inconvenient with the fact that, inside a PTF, you don’t know what columns where referenced in a PARTITION BY or ORDER BY clause. For me, this information would be very helpful in some use cases.

Parallel execution

We haven’t looked at the parallel execution yet. Of course, SQL queries containing a polymorphic table function can be executed in parallel. For row semantic PTF the database can distribute the rows between the parallel slaves without any restrictions. On the other hand, if data is partitioned, as can be the case with table semantic functions, all rows of the same partition have to be processed by the same parallel slave. It is due to the execution states that are stored and used by consequent executions of FETCH_ROWS: we don’t want the different partition-specific states are interchanged with each other.

This also means, if we don’t partition the input of the table semantic PTF, we will actually be having a serialization point in the query.

PASS_THROUGH columns

Do PASS_THROUGH columns behave something different with table semantic PTF? According to the documentation they should. That’s what we can read:

All columns in the Row Semantics PTF are marked as pass-through by default. For Table Semantics PTF, the default value for pass-through is set to false. For the Table Semantics PTF, the partitioning columns are always pass-through and this cannot be changed by the DESCRIBE function.

But actually I couldn’t find any differences. I don’t know, whether it is a documentation or implementation bug, but it simply doesn’t work as described for me. First, we have already seen in Listing 2 that all columns were passed through, although we didn’t set this explicitly. Let’s modify the function DESCRIBE so that we set PASS_THROUGH to FALSE for all columns :

FUNCTION describe (tab IN OUT dbms_tf.table_t

, cols2sum IN dbms_tf.columns_t )

RETURN dbms_tf.describe_t IS

BEGIN

FOR I IN 1 .. tab.COLUMN.COUNT LOOP

IF tab.COLUMN(i).description.name MEMBER OF cols2sum THEN

tab.column(i).for_read := true;

END IF;

tab.column(i).pass_through := false;

END LOOP;

RETURN dbms_tf.describe_t(

new_columns =>

dbms_tf.columns_new_t(

1 => dbms_tf.column_metadata_t(

name => 'SUM_LEN',

TYPE => dbms_tf.type_number)));

END;

...

SQL> SELECT *

2 FROM sumlen_ptf (scott.emp PARTITION BY deptno

3 ORDER BY empno

4 , COLUMNS(ename));

SUM_LEN

----------

5

9

15

5

10

15

20

24

5

9

15

20

26

31

14 rows selected.

Listing 6: Partitioning and ordering the incoming data

So we could set all columns including the partitioning column DEPTNO to be no PASS_THROUGH. Doesn’t make very much sense for me.

Aggregate functions

The PTF from our example behaves like analytical or window function. But could we build the real aggregate function? The one, that returns only one value (row) per table/partition? Let’s try to emulate the simple function SUM() as an example. The idea is to use the row replication to remove all rows but one, which will be used to output the aggregation result.

-- What we want to emulate

SQL> select sum(sal) from scott.emp;

SUM(SAL)

----------

29025

CREATE OR REPLACE PACKAGE emulate_sum_pkg AS

FUNCTION describe(tab IN OUT DBMS_TF.TABLE_T,

agg_col IN DBMS_TF.COLUMNS_T)

RETURN DBMS_TF.DESCRIBE_T;

PROCEDURE fetch_rows;

END emulate_sum_pkg;

/

Package created.

CREATE OR REPLACE PACKAGE BODY emulate_sum_pkg AS

FUNCTION describe(tab IN OUT DBMS_TF.TABLE_T,

agg_col IN DBMS_TF.COLUMNS_T)

RETURN DBMS_TF.DESCRIBE_T

AS

BEGIN

-- No pass-through columns

FOR I IN 1 .. tab.COLUMN.COUNT LOOP

tab.column(i).pass_through := false;

IF tab.COLUMN(i).description.name MEMBER OF agg_col THEN

TAB.COLUMN(i).for_read := TRUE;

END IF;

END LOOP;

RETURN DBMS_TF.DESCRIBE_T(new_columns =>

dbms_tf.columns_new_t( 1 =>

dbms_tf.column_metadata_t(name => 'SUM_COL' ,

TYPE => dbms_tf.type_number))

, row_replication=>true);

END;

PROCEDURE fetch_rows IS

inp_rs DBMS_TF.row_set_t;

env dbms_tf.env_t := dbms_tf.get_env();

colcnt PLS_INTEGER;

rowcnt PLS_INTEGER;

repfac dbms_tf.tab_naturaln_t;

sumcol dbms_tf.tab_number_t;

sumcurr number;

BEGIN

DBMS_TF.get_row_set(inp_rs, rowcnt, colcnt);

IF NOT dbms_tf.xstore_exists('sumcurr') THEN

dbms_tf.xstore_set('sumcurr',0);

sumcurr := 0;

ELSE

dbms_tf.xstore_get('sumcurr', sumcurr);

END IF;

FOR i IN 1 .. rowcnt LOOP

-- Actually we should only leave one row in the last rowset,

-- after having summarized ALL data

-- Not knowing whether the row set is the last one,

-- we have to do this for every rowset

-- Eliminate all rows except the first

IF i = 1 THEN

repfac(i) := 1;

ELSE

repfac(i) := 0;

END IF;

sumcurr := sumcurr + inp_rs(1).tab_number(i);

END LOOP;

dbms_tf.xstore_set('sumcurr',sumcurr);

inp_rs(1).tab_number(1) := sumcurr;

dbms_tf.row_replication(replication_factor => repfac);

dbms_tf.trace('Rows in rowset: '||env.row_count);

dbms_tf.put_row_set(inp_rs, repfac);

END;

END emulate_sum_pkg;

/

Package Body created.

CREATE OR REPLACE FUNCTION emulate_sum (tab TABLE, agg_col COLUMNS )

RETURN TABLE PIPELINED table POLYMORPHIC USING emulate_sum_pkg;

/

Function created.

SQL> SELECT *

2 FROM emulate_sum(scott.emp, COLUMNS(sal));

SUM_COL

----------

29025

Listing 7: EMULATE_SUM aggregate function

Seems to work, doesn’t it? It does, but only as long as we have very small data sets so that FETCH_ROWS will be executed only once. The problem is, you should eliminate all rows and just do the desired aggregation for all rowsets, except for the last one. But you don’t know whether the active rowset is the last one. So we are doing that thing for all rowsets.

Look what happens using a data set containing more than 1024 rows (Listing 8):

SQL> WITH emp_big AS (SELECT * 2 FROM scott.emp 3 CROSS JOIN 4 (SELECT 1 5 FROM dual 6 CONNECT BY level <=100)) 7 SELECT * 8 FROM emulate_sum(emp_big, COLUMNS(sal)); SUM_COL ---------- 2121225 2902500 Rows in rowset: 1024 Rows in rowset: 376

Listing 8: EMULATE_SUM on a bigger data set

In fact we are returning the sum once per rowset, but only the last result is correct and is actually needed. For me it is a significant limitation of the table semantic PTF at the moment.

What’s next

This was the last post in 2018. Of course, next year I will continue to investigate the polymorphic table functions. Stay tuned! Hoping that PTF will become more mature in 2019!

Merry Christmas and Happy New Year 2019!

Hello Andrej,

First of all, I wish you once again a HAPPY NEW YEAR 2019 and I’d like to thank you once again

for your excellent posts, each one a PIECE OF CAKE 🙂

Your help in deepening our understanding of the PTF topic is invaluable 🙂

I have a small question related to the procedure FETCH_ROWS from package SUMLEN_PACKAGE:

It looks to me that instead of:

dbms_tf.xstore_set(‘len’, v_len+v_currlen);

we should have

dbms_tf.xstore_set(‘len’, v_currlen);

Trying to perform a check to make sure of this, I tried to execute the function for a BIG_EMP

table, like in your 2nd package example, and got the error below:

WITH emp_big AS (SELECT * FROM scott.emp CROSS JOIN (SELECT 1 FROM dual CONNECT BY level <=100) ) SELECT deptno, empno, ename, sum_len FROM sumlen_ptf (emp_big , COLUMNS(ename)) / ORA-01426: numeric overflowIf I perform the code change above, then this SELECT works without error.

Regardless of the logical correctness of one code version or the other,

I simply wonder what is the cause of the above error.

Usually this error is raised on an attempt to assign a too big value to a PLS_INTEGER variable.

But, the emp_big has 1400 rows, so, as by my understanding, it performs the FETCH_ROWS twice

( like the FETCH_ROWS of the 2nd package, emulate_sum ).

Also, v_len itself is NULL before the first call to DBMS_TF.xstore_set,

so, in fact, the value that it tries to store is NULL.

And, anyway, I still cannot see how could this cause the PLS_INTEGER range limit to be exceeded,

considering that the final value of SUM_LEN is 7000.

I also tried to add a DBMS_TF.TRACE call and use a smaller emp_big, with 14 * 70 rows instead of 14 * 100,

and in this case we do indeed have a single call to FETCH_ROWS.

Thank you lots of times once again & Best Regards,

Iudith Mentzel

Haifa, Israel

Hello Judith!

Thank you for your comment! And yes, you are right.

It should be

dbms_tf.xstore_set(‘len’, v_currlen);

I was so focused on how the PTF works that I didn’t really think through the simplest logic. 😉

In fact, we don’t need the second variable at all:

PROCEDURE fetch_rows IS rowset dbms_tf.row_set_t; colcnt PLS_INTEGER; rowcnt PLS_INTEGER; len_curr_col dbms_tf.tab_number_t; v_currlen NUMBER := 0; BEGIN dbms_tf.get_row_set(rowset, rowcnt, colcnt); dbms_tf.xstore_get('len', v_currlen); v_currlen := nvl(v_currlen, 0); FOR r IN 1..rowcnt LOOP v_currlen := v_currlen + length (rowset(1).tab_varchar2(r)); len_curr_col(r) := v_currlen ; END LOOP; dbms_tf.xstore_set('len',v_currlen); dbms_tf.put_col(1, len_curr_col); END fetch_rows;I don’t exactly understand the reason for numeric overflow. But it happens with XSTORE_GET in the second chunk and not with XSTORE_SET in the first one, where I’ve tried to store NULL (you are right again 😉 ).

Storing NULL was no problem, but getting NULL into PLS_INTEGER was one! I tested this scenario with a variable of type NUMBER and it works without exception! So I changed the variable type to NUMBER altogether.

Best Regards

Andrej

Andrej,

thank you very much for this beautiful series of PTF. I’ve learned a lot before reading the whole documentation. I also make a simple implementation for myself: http://mustafakalayci.me/2020/11/02/which-column-has-changed-in-every-row/

I was always asked what changed for every row in a log data and finally I have proper solution 🙂 I would be glad if you look at too (thanks to Chris Saxon, he looked at and guided me about 1024 rows chunk for fetched_rows procedure).

wish you a healthy days.