SQL Macros – Game Changing Feature for SQL Developers?

The fear of complex, hard-to-read SQL queries often leads developers to break them down using countless PL/SQL functions. Sounds familiar and like best practice for you? However, you often have significant performance drops caused by well-known context switches between SQL and PL/SQL. Even worse: the business logic hidden in PL/SQL functions remains invisible to the Query Optimizer!

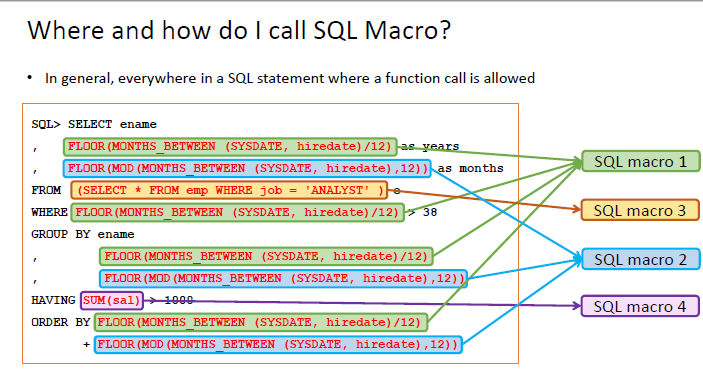

With SQL Macros – a new feature in Oracle 20c – the developer no longer has to make the difficult choice between modular, clear code and good performance. The idea behind the new PL/SQL functions is that they are not executed at runtime (no context switch!), but return SQL snippets that are incorporated into the main query just before parsing. This allows to encapsulate the logic while remaining fully transparent to the optimizer.

Polymorphic Table Functions in 18c

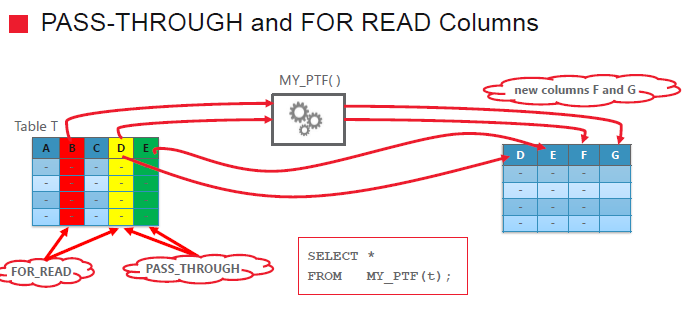

SQL has many powerful techniques to analyze your data right out of the box, but remains extensible even if you’re missing something. In Oracle 18c, you can do it much easier with polymorphic table functions (PTF). As an evolution of table functions, PTF is called in the FROM clause and is capable to encapsulate the custom processing of the input data, whereas the type of the input row need not be known at design time and the type of the output row may be determined at runtime based on the actual PTF invocation parameters.

Based on simple examples, this session will give you an introduction to the main concepts and the infrastructure behind the PTF. Discover how you can develop your own flexible and self-describing extensions while focusing on business logic and leaving complex things like parallel execution to the database.

Online Statistics Gathering for ETL

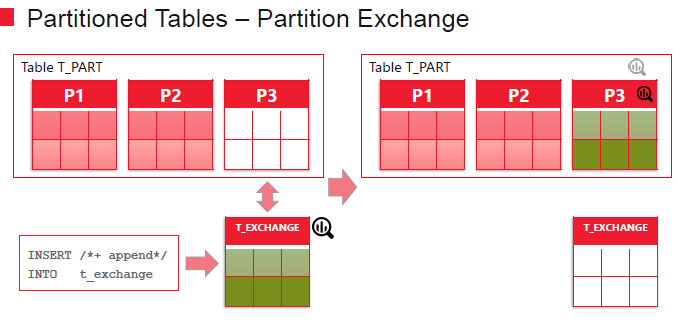

Online Statistics Gathering for Bulk Loads – the official name of the feature – was introduced in Oracle 12.1. The idea is to gather optimizer statistics “on the fly” for direct path loads. Sounds good for ETL? In certain scenarios it makes sense but even then there are many points to consider so that it becomes a reliable part of your ETL processes. When exactly will it be working and when not? Do you prevent it yourself? Documented, undocumented cases, known bugs. Which statistics are gathered and which are not? What has to be considered with partitioned tables? Interval partitioning – special case?

Properly Use Parallel DML for ETL

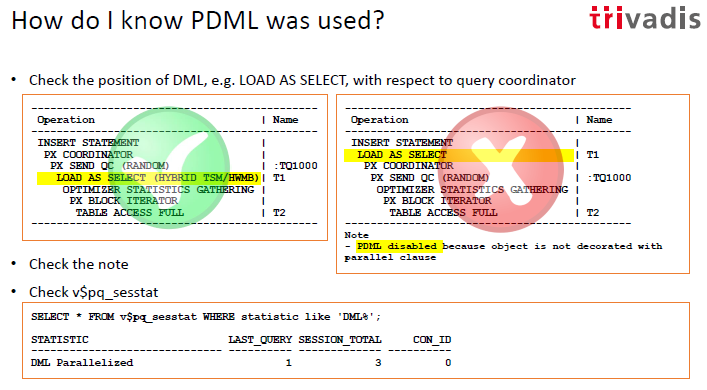

It is no secret that for high-performance ETL processes, not only queries but also write operations should be parallelized. But when you make use of it, is it simply “switch on and forget”? What do you have to consider? Can it also have negative effects? After a short reminder on how it works (including space management methods), some patterns are presented that have been noticed in several ETL review and tuning projects and help to find the answers to the following questions: What is the interaction between PDML and partitioning of the target table? Can PDML lead to increased fragmentation of the tablespace? Can you control it? How does the Hint PQ_DISTRIBUTE help?

MERGE SQL Statement: Lesser Known Facets

Introduced in Oracle 9i, the MERGE SQL statement is definitely no longer a new feature. But sometimes its behavior cannot be simply deduced from the knowledge about INSERT and UPDATE. There are some special cases which should be considered.

In this presentation some less known facets will be highlighted, for example:

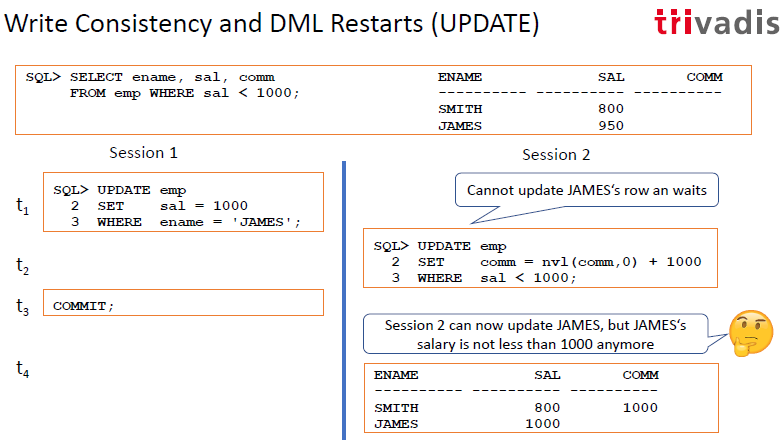

- How does a MERGE behave with regarding write consistency and what write consistency is all about. What are statement restarts?

- What is ORA-30926? Why does it take much longer if this error occurs?

- I am not allowed to update a column that was used in ON condition (ORA-38104) What if I have to?

- Parallel DML, Direct Path Loads: what do I have to consider?

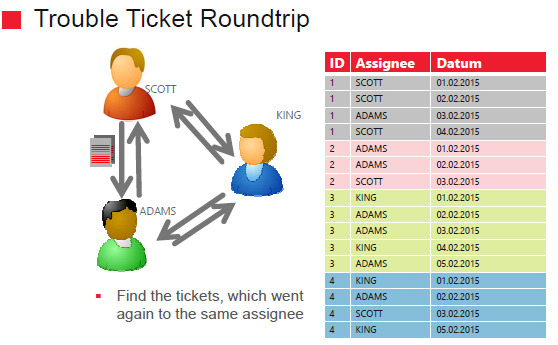

SQL Pattern Matching – should I start using it?

Introduced in Oracle Database 12c, the new MATCH_RECOGNIZE clause allows pattern matching across rows and is often associated with Big Data, complex event processing, etc. Should SQL developers who are not (yet) faced with such tasks ignore it? No way! The new feature is powerful enough to simplify a lot of day-to-day tasks and to solve them in a new, simple and efficient way. The insight into a new syntax is given based on common examples, as finding gaps, merging temporal intervals or grouping on fuzzy criteria. Providing more straightforward approach for solving known problems, the new functionality is worth to be a part of every developer’s toolbox.