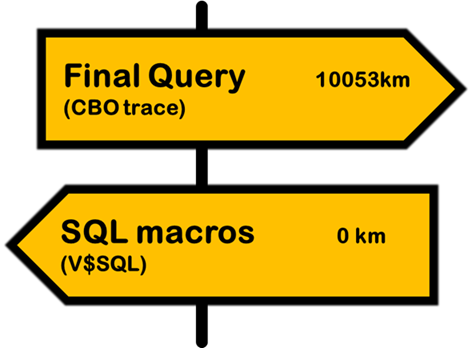

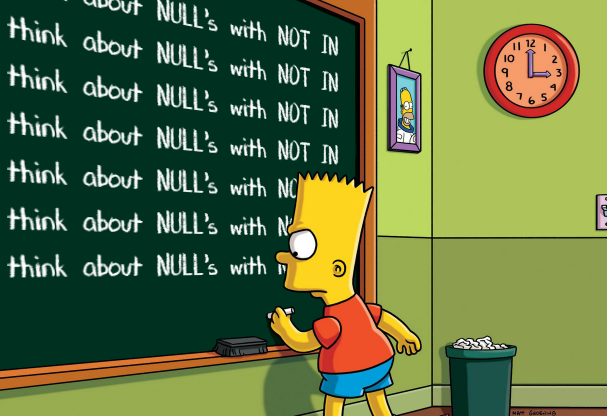

One of the most frequently mentioned use cases for table SQL macros is implementing something that works like a parameterized view. My first post on this is from 2020, and it ends with the thought that this approach has many advantages but gives us no real view at all. And indeed, I have heard this argument several times since then. Views are schema objects that we have been familiar with for decades; they are very easy to develop and deploy, and their query code is easily accessible via the data dictionary. Maintaining query code within SQL macros, on the other hand, can be a bit more difficult and is understandably not to everyone’s preference. But what if you could have your cake and eat it too? Here is how…
